Yasaman Sheri

Designer + Director

I am a designer & director leading and creating interfaces for new product categories using emerging technologies. I think deeply about interaction design and human-machine-nature interfaces, and I work with world-renowned companies. My expertise is in Augmented & Mixed Reality, AI, Intelligent Materials and Sensors, Human-Machine Interface (HMI) for Autonomous Vehicles, and Biotechnology.

In addition to leading novel interaction work, I am faculty at CIID and in Graduate Industrial Design at RISD, and have been a guest lecturer / visiting professor at Art Center College of Design, NYU Tandon School of Engineering, ZhDK, Parsons School of Design, UPenn, Stanford University, Rutgers University and University of Washington.

I served on the jury for the IxDA Interaction Awards 2020 & Core77 Design Awards 2019. I gave the final graduation speech at Umeå Institute of Design, and am dedicated to mentoring, building teams and empowering designers & womxn. I have mentored XR teams at Mozilla and the MIT AR VR hackathon, mentored creative entrepreneurs at New INC, NASA Ames and Singularity University, and am currently building the R&D Lab for Serpentine Galleries.

—

A selection of my work can be found below ↓

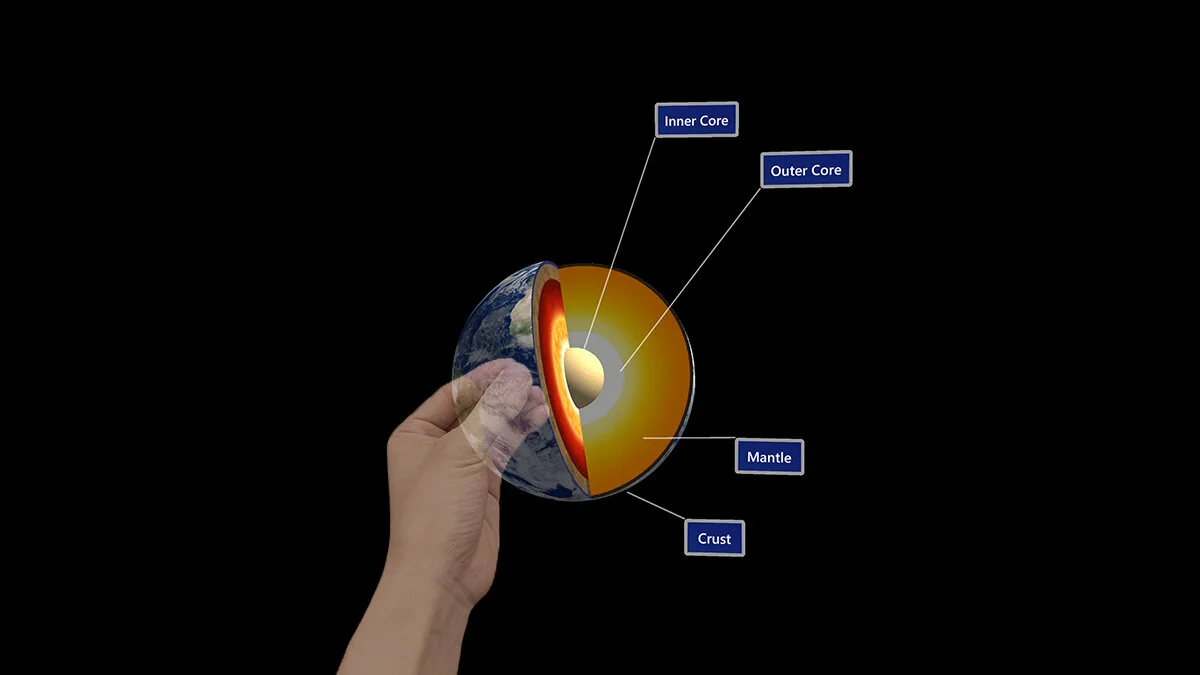

Gesture Interaction Model

Microsoft HoloLens

2012-2017

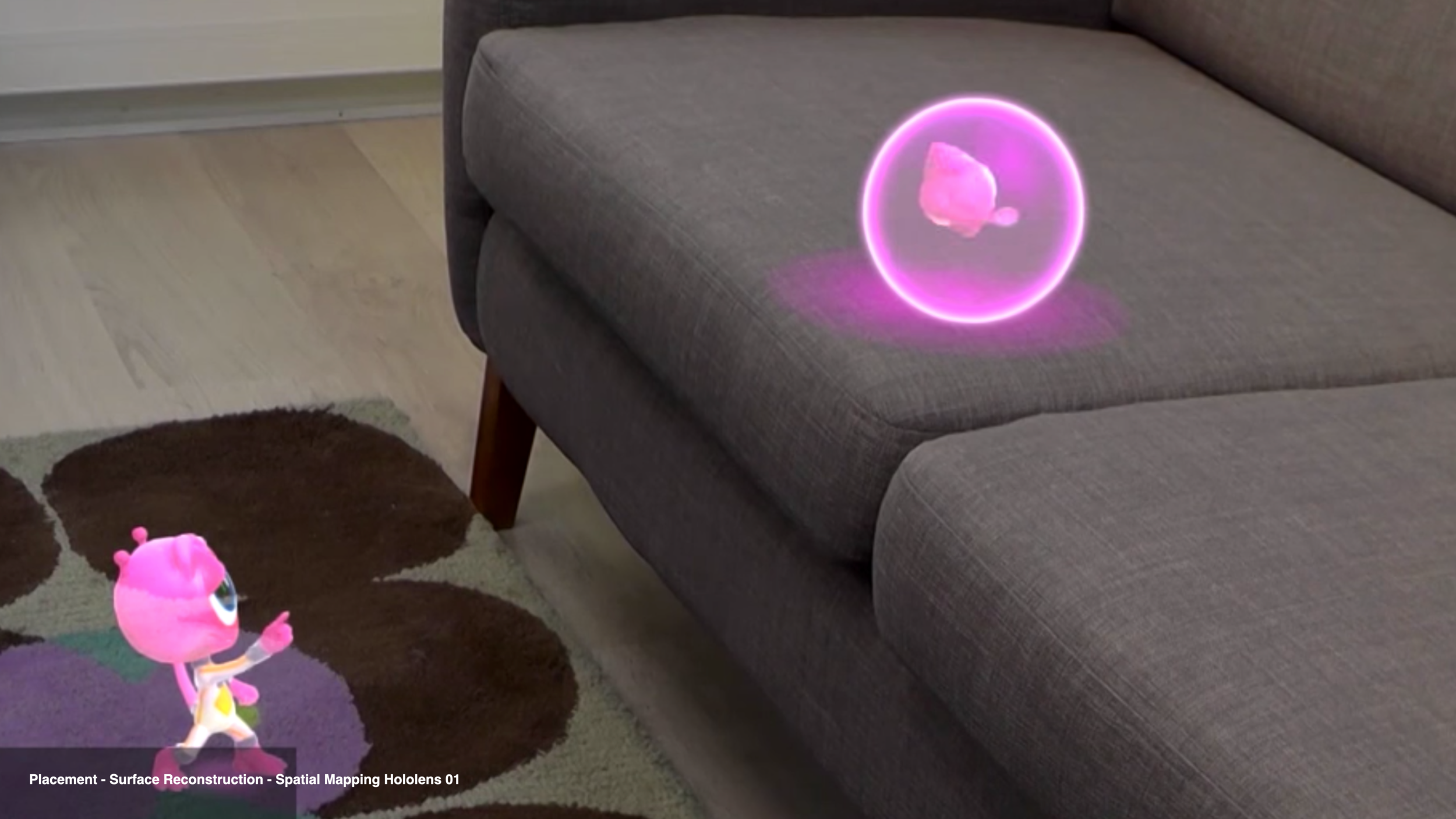

I designed all hologram manipulation, including movement, scaling, rotation, scrolling, zooming, panning, body locking, body tagging and placement on surface reconstruction. I was awarded 5 patents for novel gesture interaction, covering two-handed gestural interaction, intuitive touch interface and fully articulated hand tracking applied in the OS. One of the most important innovations in this space that I was responsible for was the interaction paradigm for distant vs. direct interaction. I presented prototypes, interaction models and design direction directly to Alex Kipman and Senior Leadership at Microsoft.

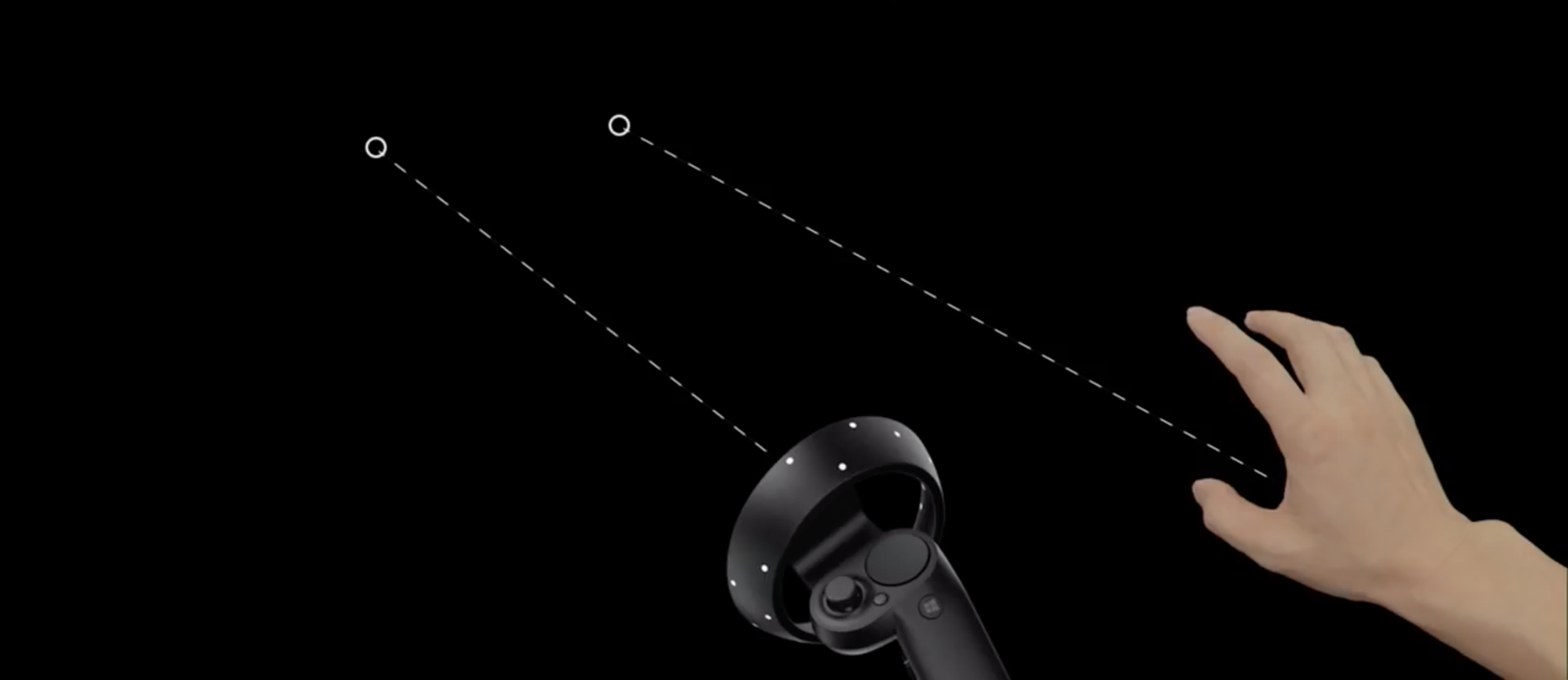

Cross Device MR Platform

Microsoft Mixed Reality

2016-2017

After the launch and shipping of HoloLens, Microsoft Windows Holographic (Mixed Reality) moved on to cross-device interaction. My work here was to think not only about HoloLens AR headset inputs such as Gesture, Eye and Gaze input or Surface Reconstruction, but also about the full virtuality of VR worlds, with inputs such as 6-DOF, keyboard and game controller, and teleportation.

Four classes of augmentation were defined: Human (including all bodily inputs), AI companion, Objects and Space. Crossing from real to virtual, and the mixes in between, created a challenging and fascinating opportunity for the design of systems, platforms, interfaces and the micro-interactions of the “in-betweens”.

This part of the project was about the spectrum of virtuality across contexts: designing for consistency while celebrating the differences each platform would offer.

In addition to directing design across devices and inputs, I was responsible for the “avatar” and “people” representation in online spaces, including social VR space. How are our bodies and intentions represented virtually, and what interaction paradigms emerge as a result of being online together?

↑ Wall Magnetism on Surface Reconstruction

Biosensor Anthology

Ginkgo Bioworks

2018 — Ongoing

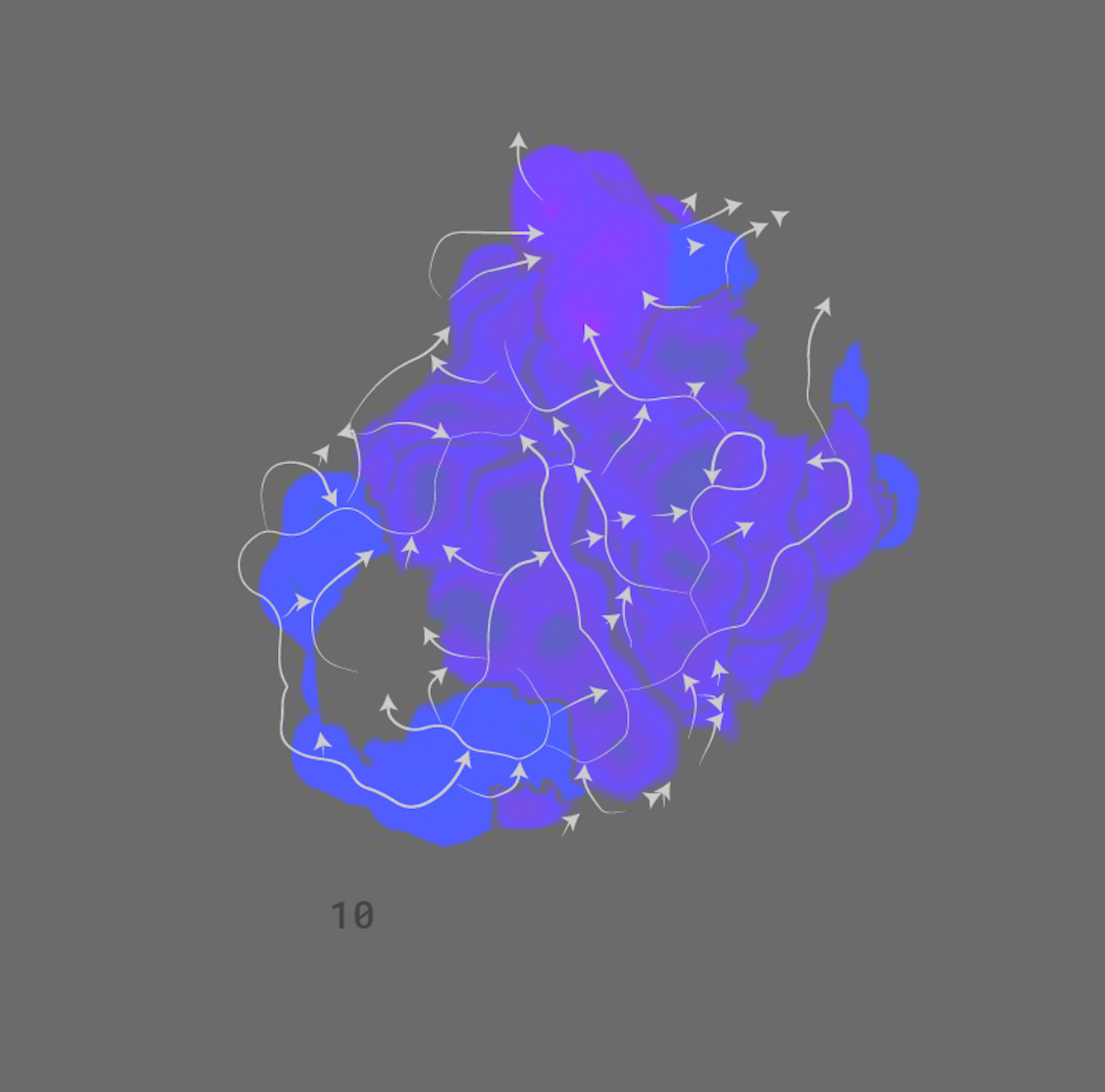

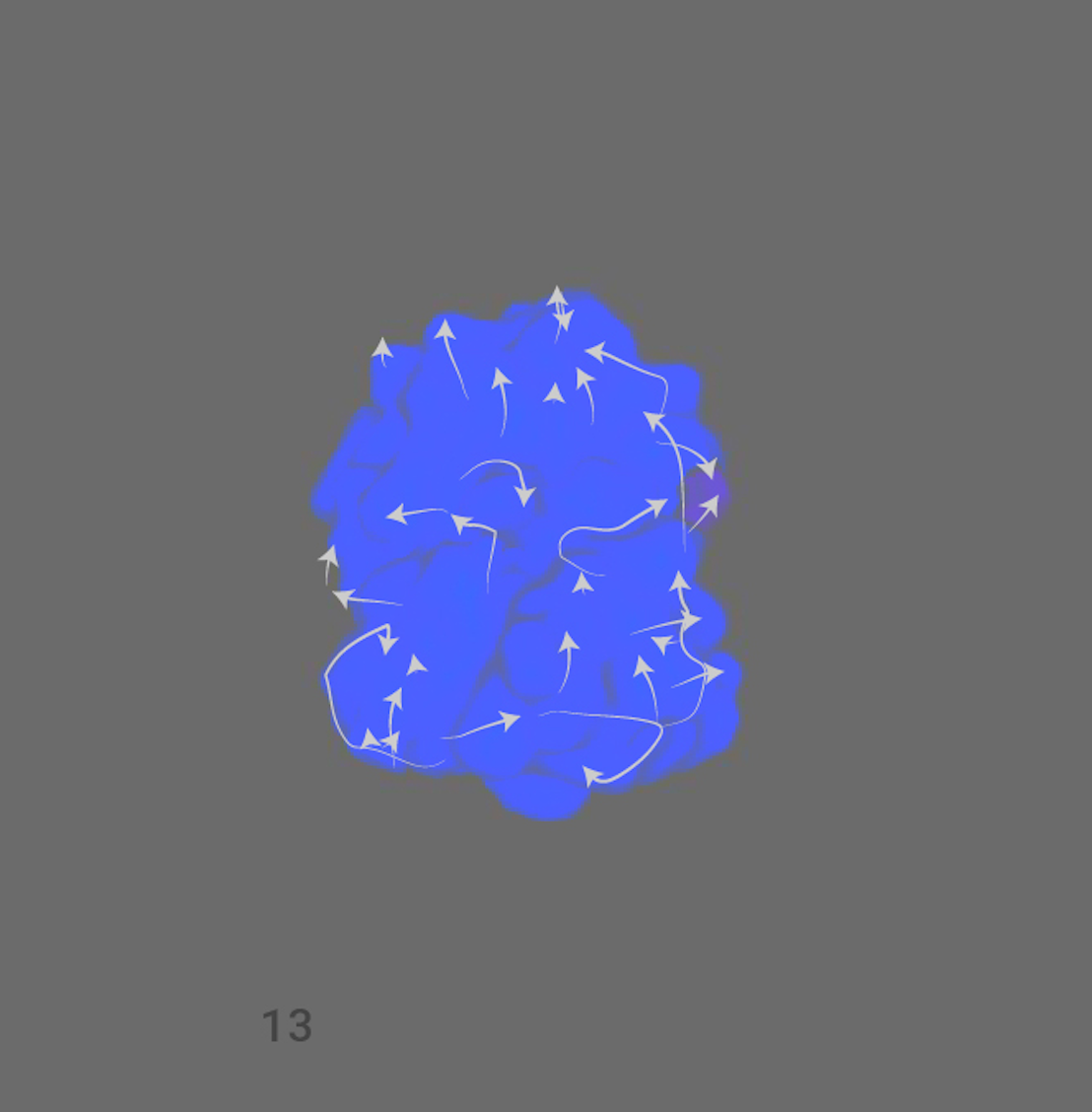

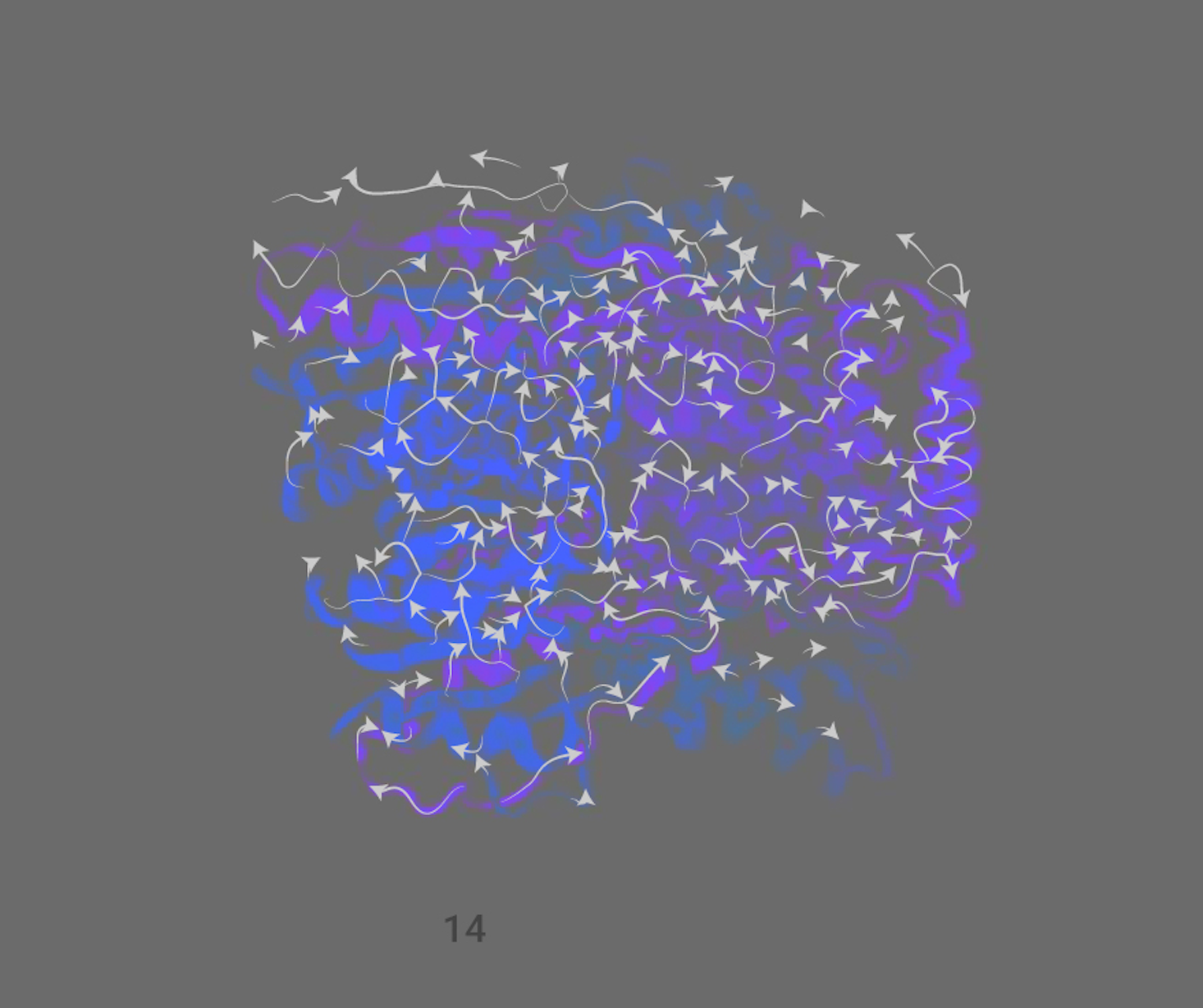

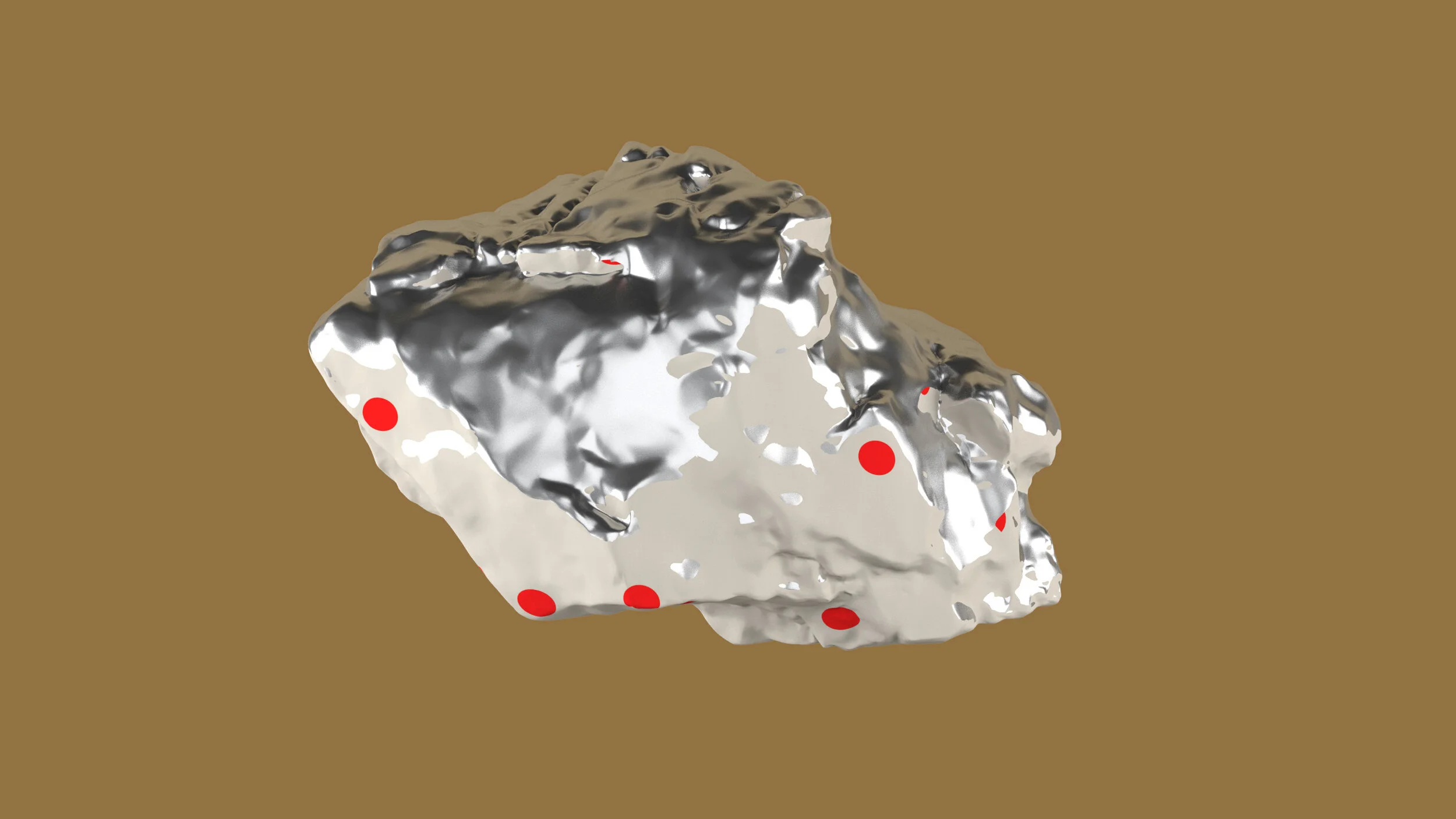

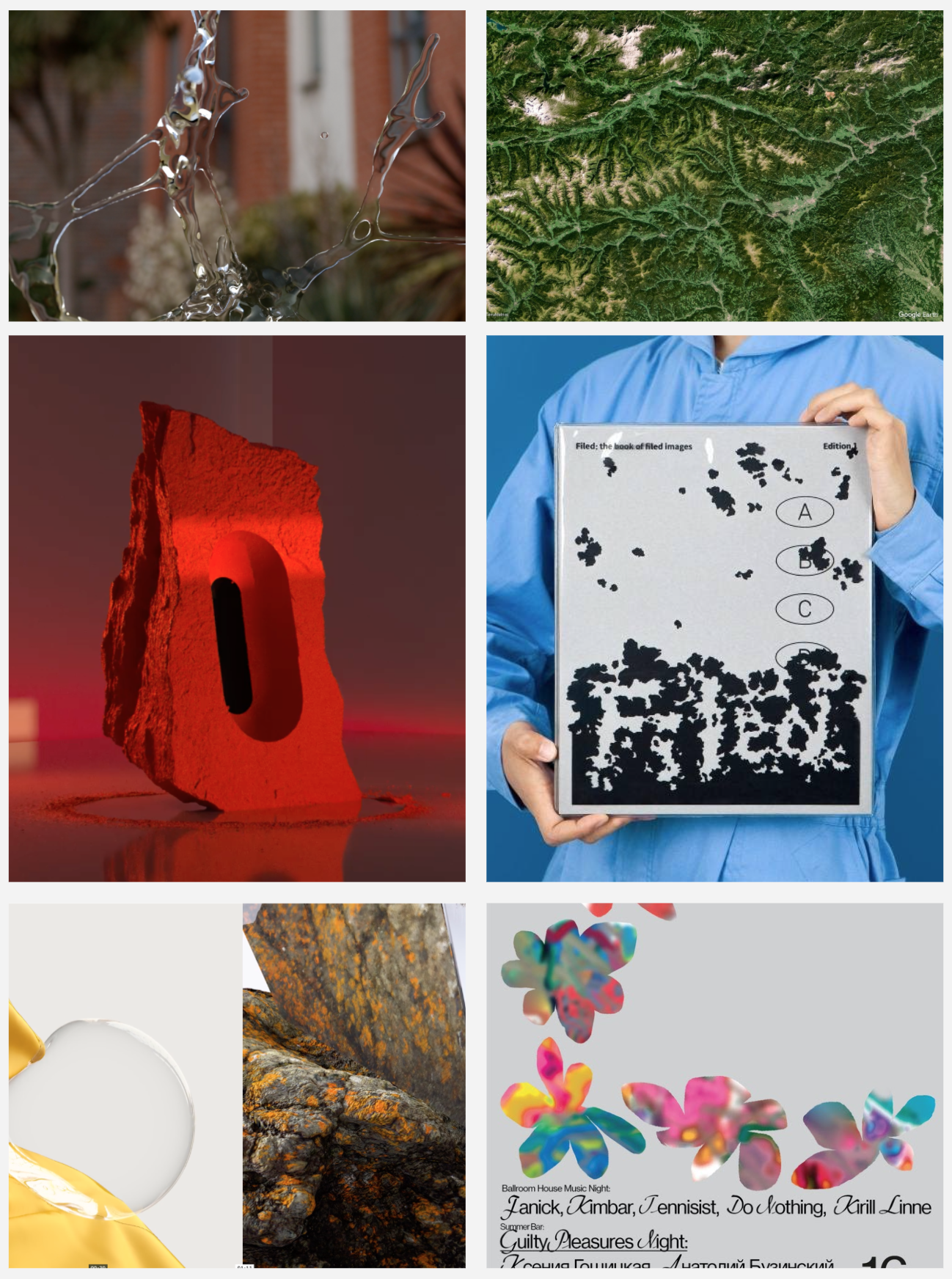

Biosensors are invisible to human perception and require scientific apparatus to view, capture and interact with. The graphical visualizations below show the captured geometries of proteins whose structural shape aids in sensing a variety of chemicals such as aroma and flavor molecules, toxins and hormones. Through ongoing conversations with scientists, and using physical and digital scientific tools, the representations of these abstract sensors were collected as part of the project to make them visible, nurturing a common ground for communication in the world of arts and sciences.

↑ Anthology of collected geometries of different biological sensors

I learned and used the PyMOL protein software to visualize and create a categorical reference for the Sensor Anthology. Using this software and the Protein Data Bank, an online open-source platform for accessing 3D protein data, I collected the surface geometry and point clouds and brought them into visualization software such as Adobe Creative and C4D. The visual assets serve as a collection and database of biosensors that lets scientists and designers speak the same language, and makes visible the invisible scales only seen with high-tech instrumentation, often inaccessible to the general public.

X, Moonshot Factory

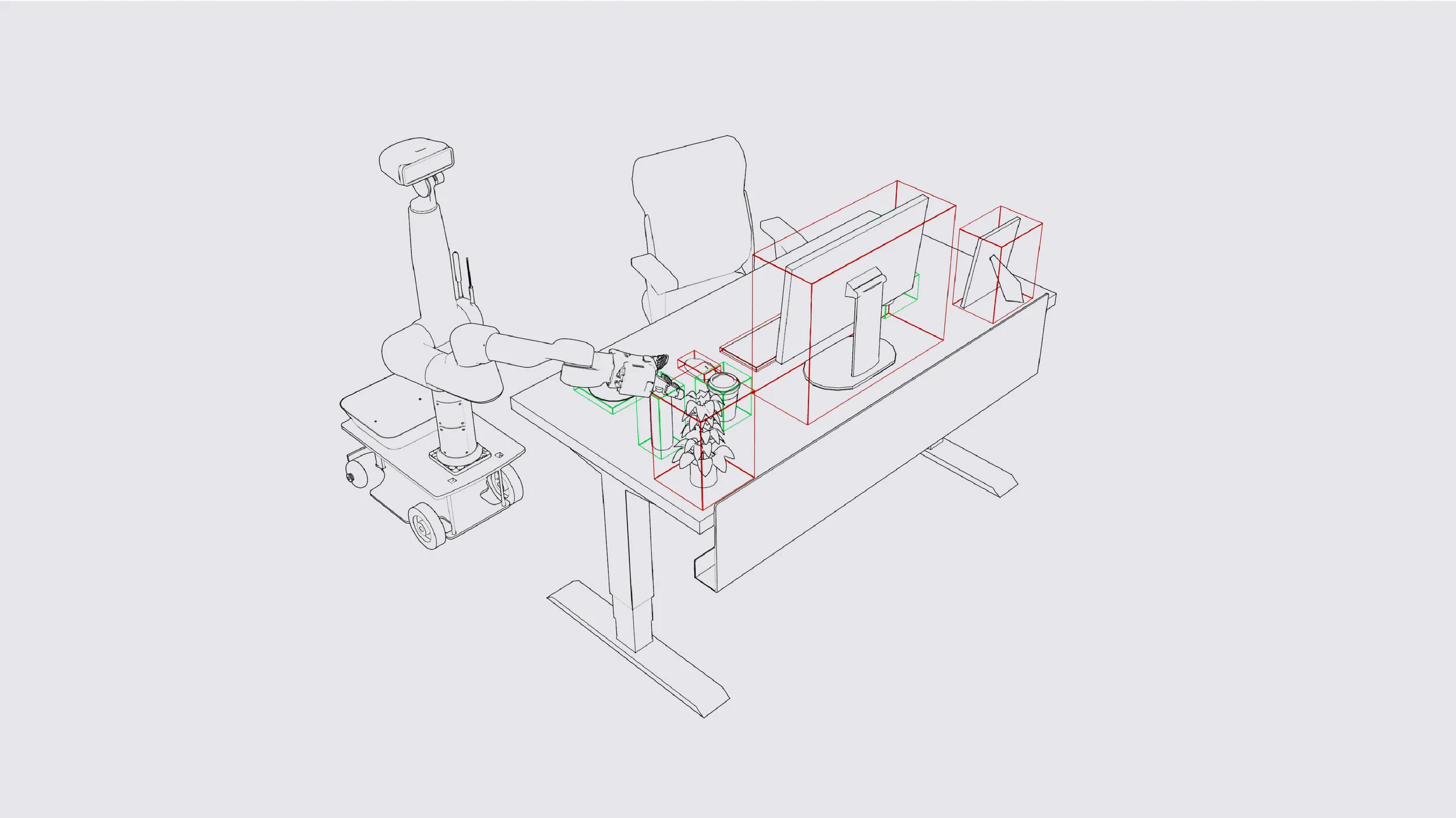

Autonomous Robotics Interface

2018

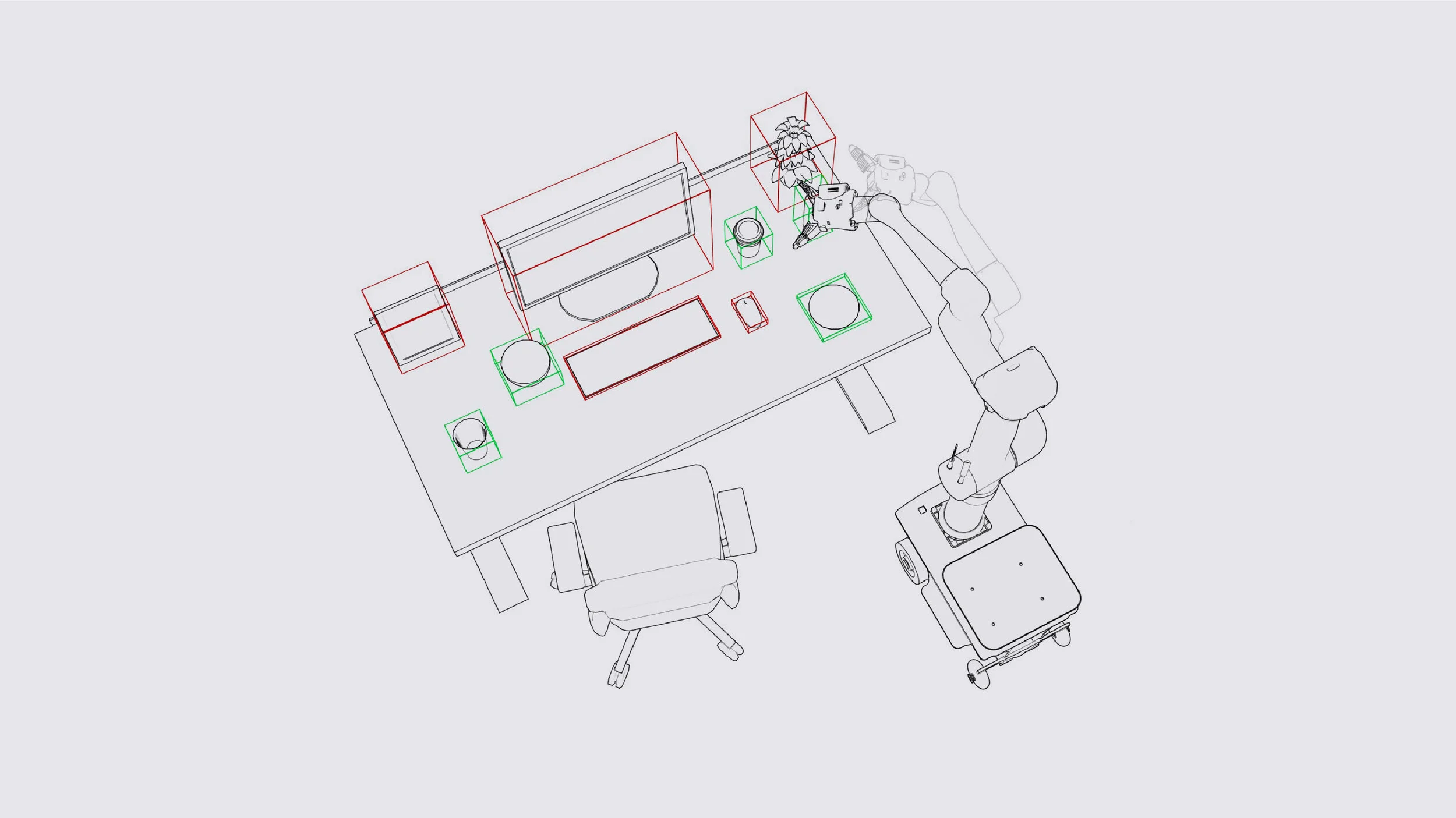

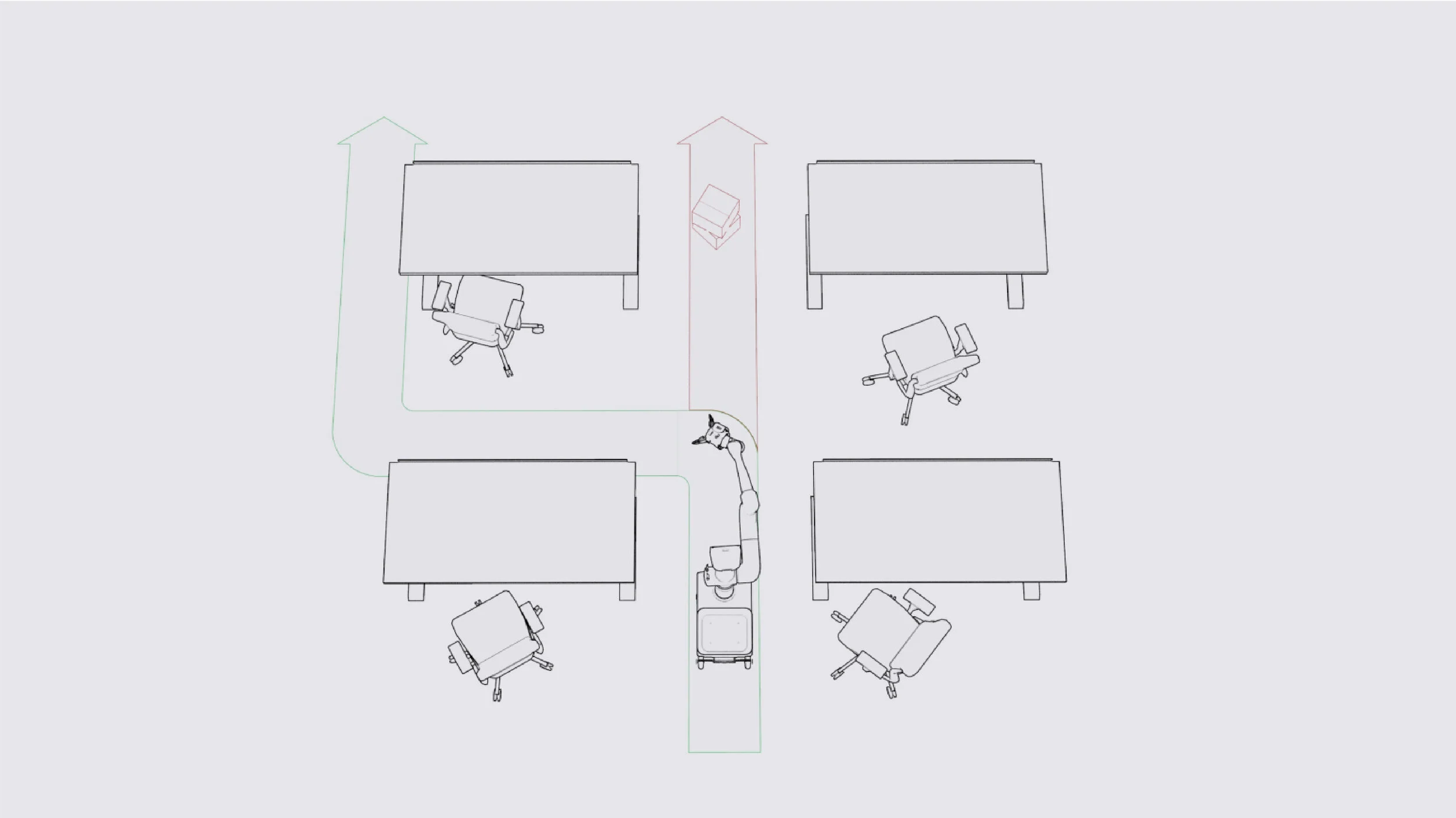

Working in the Core Design team at Google X, I primarily led Interaction Design and Art Direction for a large startup-esque project in stealth mode focusing on robotics and autonomous vehicles of various kinds. I was the only designer on the project, responsible for the all-up systems architecture, UX patterns, micro-interactions and the final visual assets for the Human Machine Interface (HMI) of self-driving robotics, as well as a fleet of robots. There were several different interfaces accommodating remote, distant, near and touch interactions, manifested both on-vehicle and remotely for long-distance control.

I worked directly with the “CEO” of the project to define and help shape the importance of trust by highlighting affordances and feedback mechanisms via haptics, visuals and sound for the variety of users. In addition, I worked closely with engineers to communicate the designs I proposed and implement the prototypes directly in the vehicle. The project focused on Machine Sensing & Perception, LIDAR and camera sensors, and remote and near physical and digital input modalities.

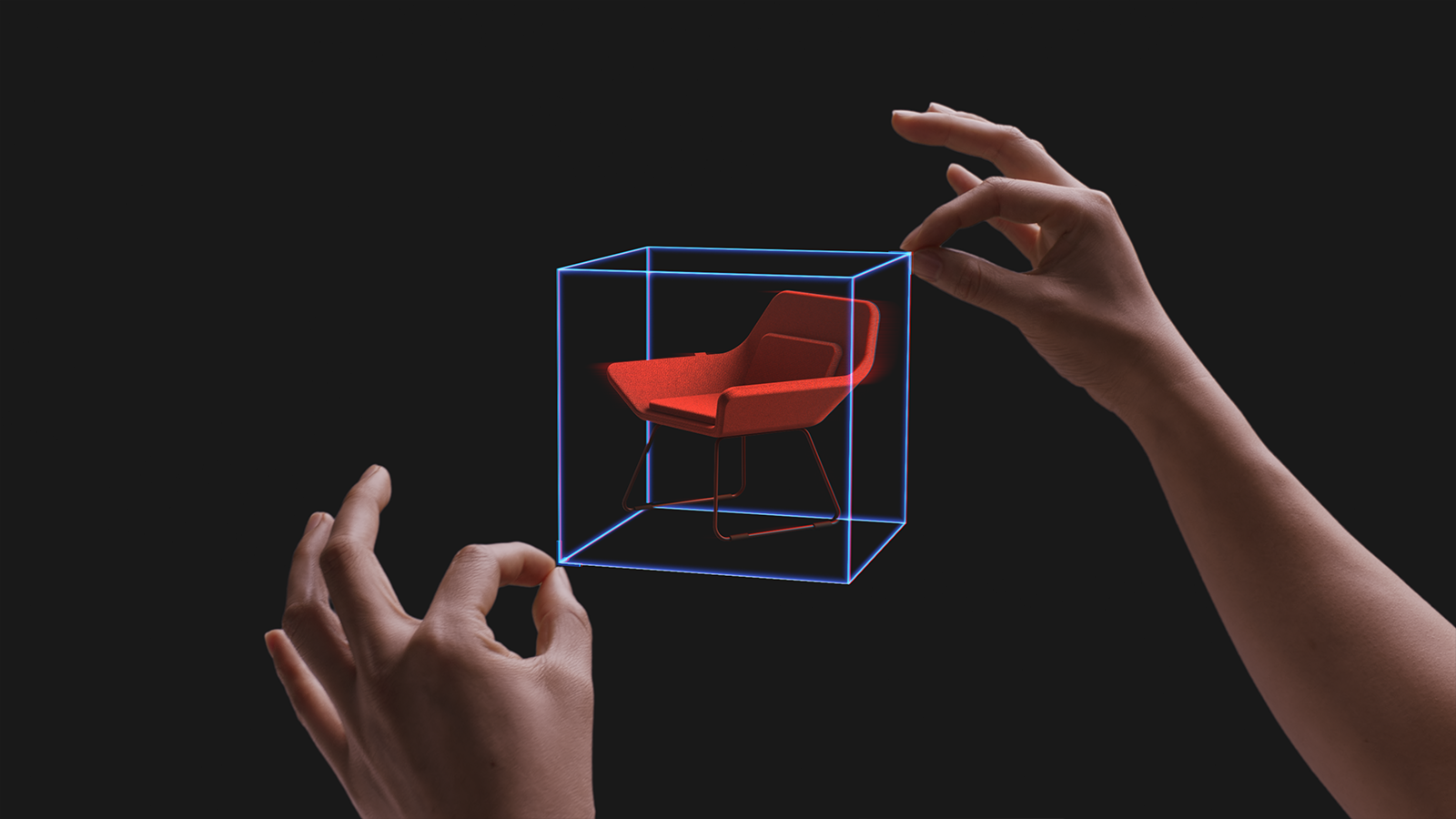

Augmented Intelligence & AI Case

IKEA Design and Research Lab

Space10

2019 - 2020

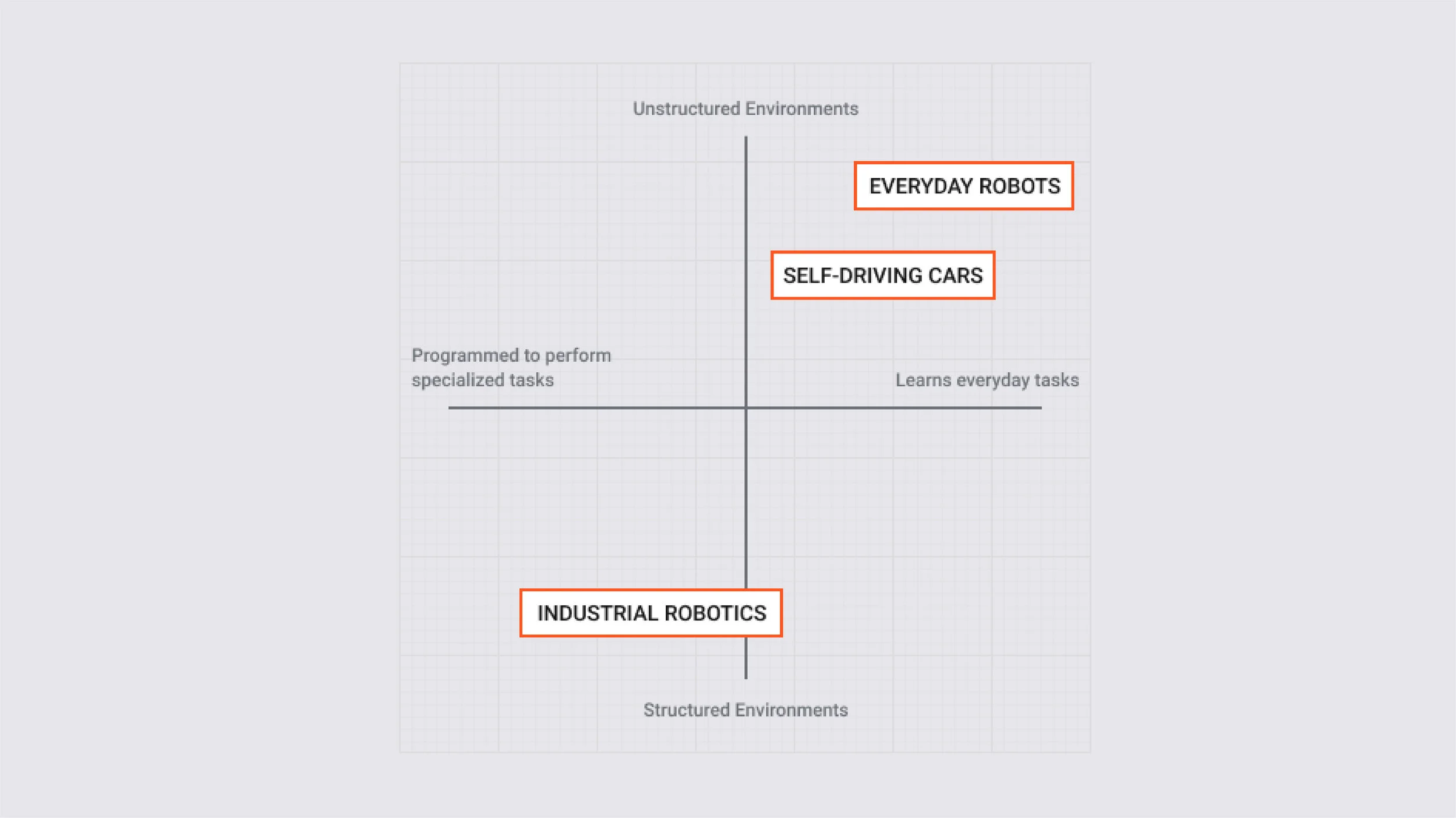

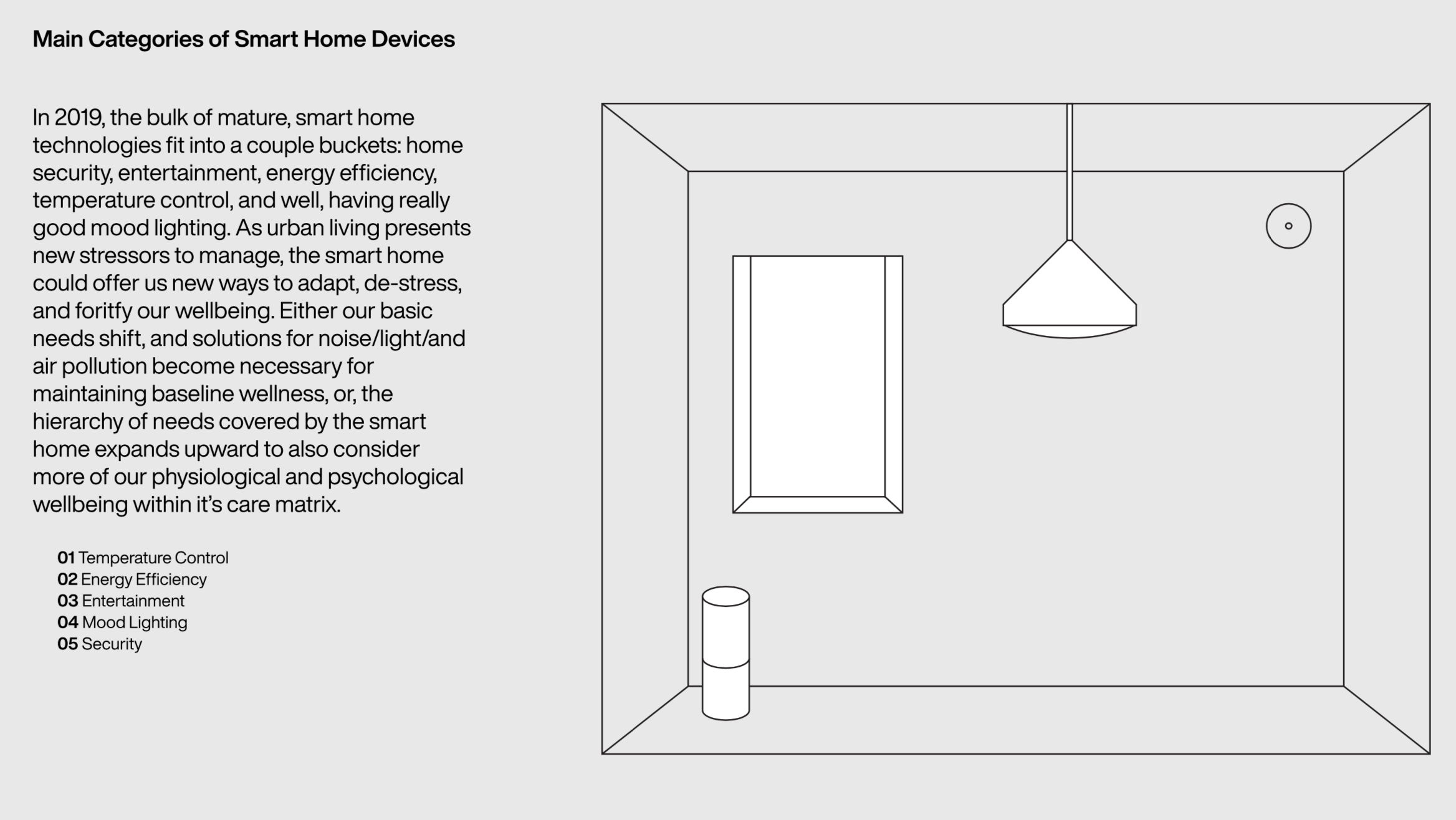

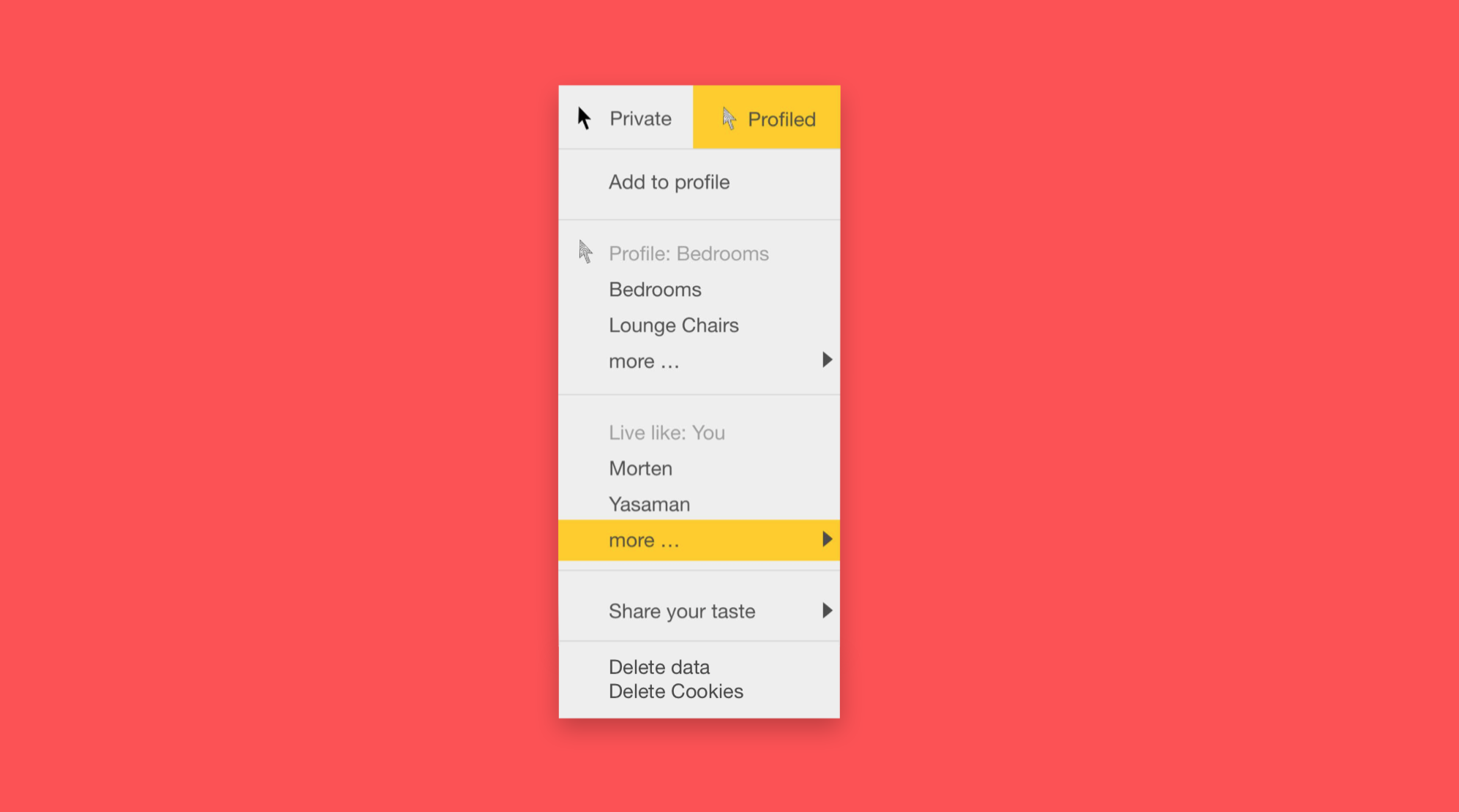

I worked with IKEA and the Space10 internal team to lead frontier work in Spatial Computing, Augmented Intelligence and AI. My work included directing and initiating strategic projects that bring digital platforms and technologies to IKEA, which is uniquely set up to focus on the context of Home and Interior Spaces.

I directed the initial ideation, briefs (which were later handled and executed by a producer), prototypes and topics of interest for projects, and set the vision for internal and external collaborators consisting of brand studios, technologists, partner companies and organizations, designers, copywriters and project managers who would shape the Everyday Experiments platform, to be launched in early spring. The platform explores several tracks of technology exploration at the intersection of Machine Sensing & Perception, AI, Home and Interfaces of Trust. Visual and technical execution was by partner studios; all information can be found on the platform website.

↑ UX of Trust in Augmented Spaces & privacy

Paper Biosensor — Material Intelligence

Ginkgo Bioworks Lab

Life Sciences + Health

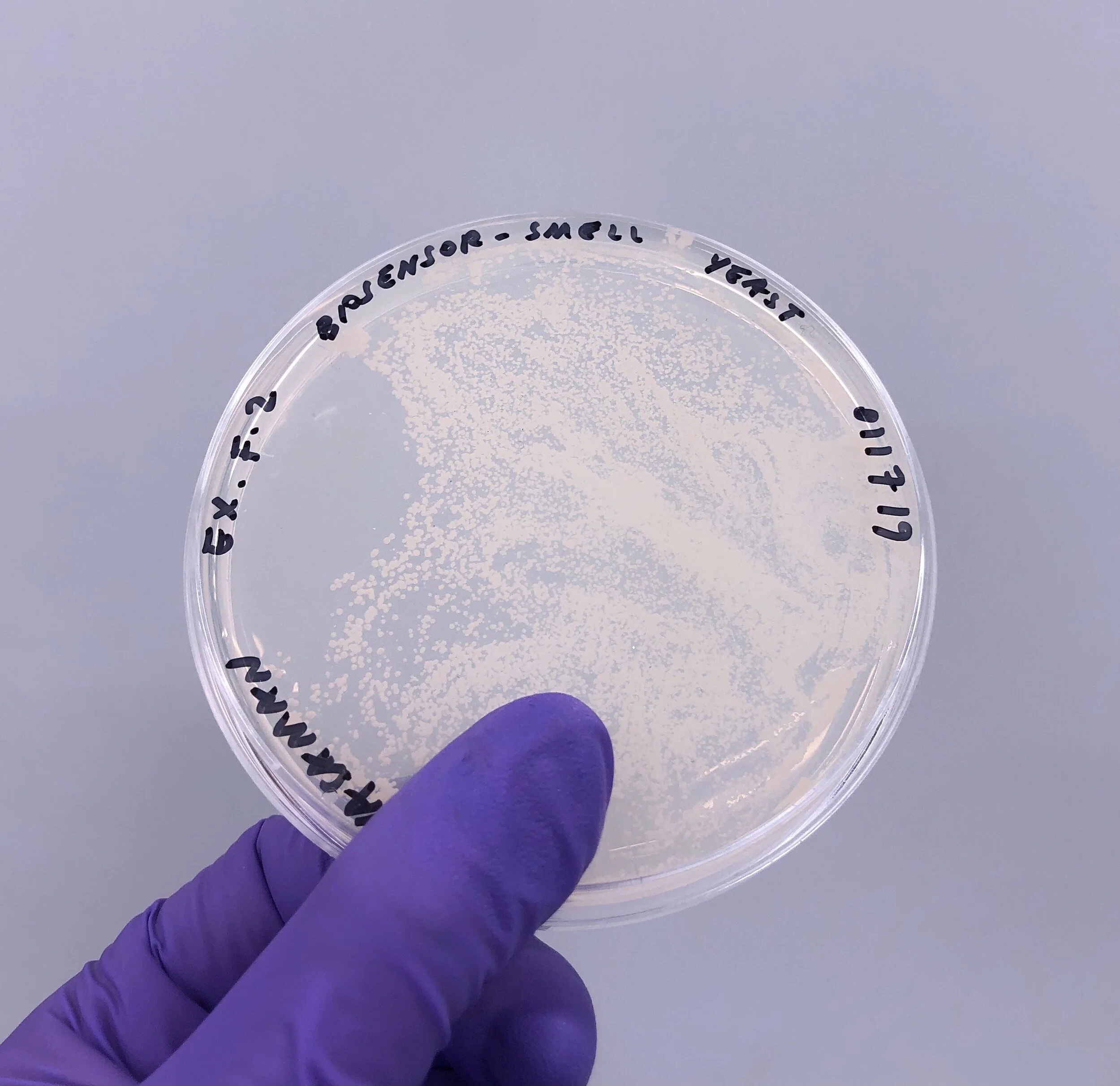

2018-2019

I worked with Ginkgo Bioworks, one of the world’s most cutting-edge and advanced biotechnology companies, to direct and create paper-based biosensors. Working with Wyss Institute for Biological Engineering, and with guidance from MIT, Purdy Lab and Director of Biosensor, I created a set of affordance “analog” sensing wearables that use biosensor proteins to detect the presence of stress and hormone molecules, smell molecules, flavor molecules and toxins. The history of analog-based biosensors is vast, spanning transportable diagnostics. While building specialized biosensors in the lab with the help of scientists and synthetic biologists, I designed a series of intelligent paper forms that explore contexts beyond the hospital: the body as an interactive surface, and the home & urban landscape as a relationship to the collective.

Design Debates

Curation + Direction

2019 — 2020

**Due to the COVID-19 pandemic, the series has been put on hold until further notice.**

This series centered on knowledge building in emerging technologies, specifically in the context of well-being. I curated experts to engage in dialogues and workshops, directing several studios, writers, thinkers and designers. The goal was, alongside building and creating design, to actively question our own assumptions & biases. The project was also important for bringing together knowledge around machine learning, mixed reality, biotechnology and sensors with the self, community, home and planet in mind. It has been recognized by IKEA for pushing the Augmented Intelligence and AI case forward at a high level, has helped educate internal teams at Space10, and has built local and global connections through events scheduled in New Delhi, New York and Copenhagen, securing collaborators such as Pioneer Works, Copenhagen Contemporary & CPH:DOX.

First I defined a technology stack, and then I turned it upside down to challenge the design thinking around sensor systems that have become so ubiquitous. I curated and directed conversations on the following topics.

→ Inclusive Inputs ✳︎Context of India✳︎

→ Virtual Celebrities ✳︎Lil Miquela✳︎

→ Data as Reality? ✳︎Altered Meaning✳︎

→ Interfaces of Ecology ✳︎Non-human Scales✳︎

→ Materials as Sensors ✳︎Afforded Intelligence✳︎

→ Alternative Platforms of well being ✳︎Are.na✳︎

Through vehicles that educate, empower and publish, and by asking questions, I challenge myself to be a lifelong learner and expand on my artistic & design practice.

✱ I work with industry to imagine and create interactions that become realities.

✲ I also work with a variety of educational institutions to teach design & art for emerging interfaces at the intersection of technology & science.

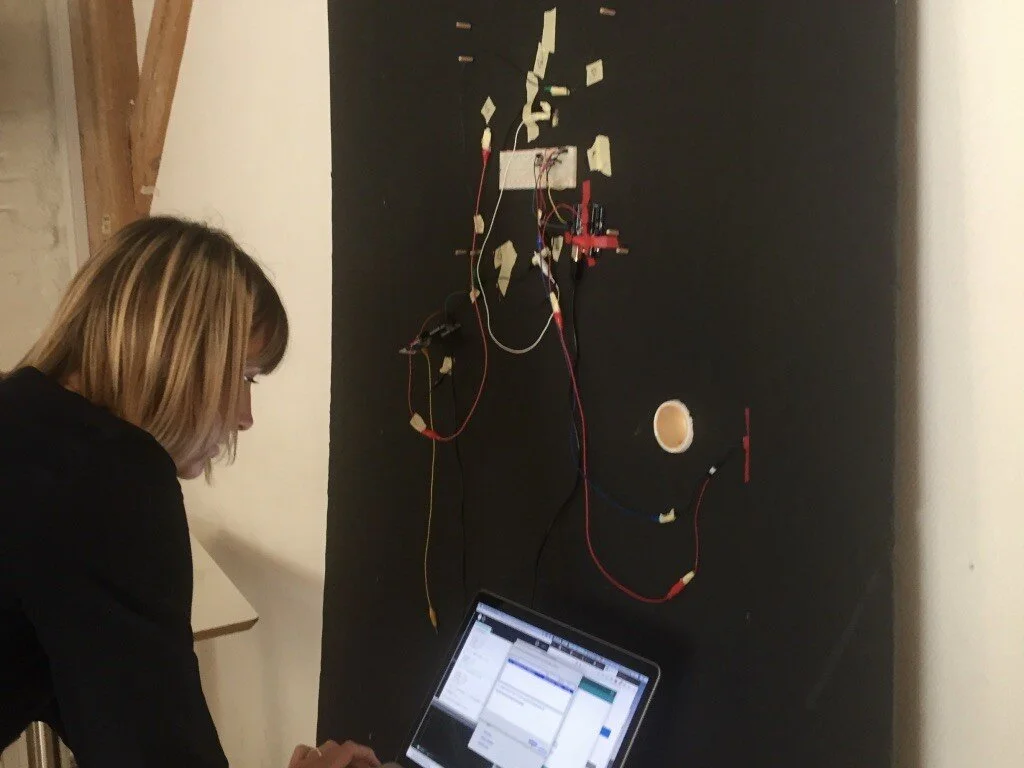

My students are incredibly hands-on, and I push them to test, fail and repeat. Below is a selection of projects and workshops with students of the Copenhagen Institute for Interaction Design, highlighting Sensing & Perception.

I am humbled to have been asked to speak & share my thoughts on Design as my practice expands.